Important Notice

This opportunity is only open to students from Durham University.

We are excited to announce that we have secured funding from EPSRC to offer student internships from Michaelmas term to March 2026! The internships take place during term time at 6 hours per week, alongside full-time study. £2,500 per student is available to support participation.

These internships are being made available through the Bede National HPC service, hosted by N8.

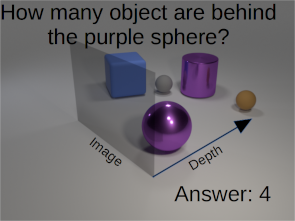

Project: HPC Benchmarking Assembly Tasks

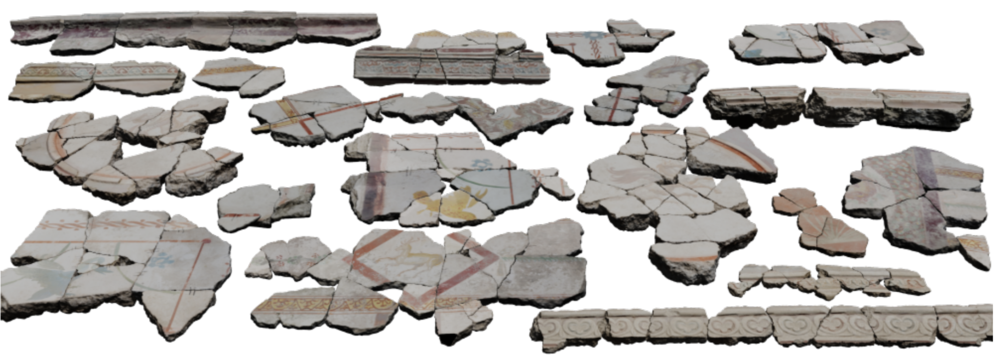

This project focuses on adapting and implementing benchmarks for performance evaluation of cluster-based assembly tasks in puzzle-solving environments. This exciting opportunity combines high-performance computing with algorithmic problem-solving.

Project Components:

- Development of benchmark framework - Design and implement a comprehensive benchmarking system for assembly task evaluation

- Adaptation of existing assembly/puzzle-solving algorithms - Modify and optimize current puzzle-solving approaches for cluster computing environments

- Evaluation of benchmarks across Durham clusters - Test and analyze performance across Durham's HPC infrastructure

Key Details:

- 📅 Start Date: Week commencing 20th October 2025

- ⏰ Duration: Michaelmas term to March 2026 (6 hours per week)

- 💼 Funding: £2,500 per student

- 🎓 Support: Assigned Research Technical Professional (RTP) from Advanced Research Computing

- 🤝 Additional Benefits: Opportunities to participate in community building activities and attend events

Perfect for students interested in high-performance computing, algorithm optimization, and computational problem-solving!

Skills You'll Develop:

- High-performance computing and cluster programming

- Benchmark design and performance evaluation

- Algorithm adaptation for parallel computing environments

- Experience with Durham's state-of-the-art HPC facilities

Ideal Candidate:

- Background in computer science, mathematics, or related field

- Interest in algorithms, puzzle-solving, or computational problem-solving

- Some experience with programming (Python, C++, or similar)

- Curiosity about high-performance computing and parallel processing

How to Apply:

Please submit an Expression of Interest (EOI) via email, addressing each of the following:

- Your academic background and relevant skills

- Why you're interested in HPC benchmarking and assembly tasks

- Any previous experience with programming, algorithms, or HPC (if applicable)

EOI Application Deadline: 5pm on Wednesday 8th October 2025

EOI Submission via email: Dr Stuart James (stuart.a.james@durham.ac.uk)

Shortlisted candidates will be invited for a brief online chat on 9th or 10th October 2025.

Unfortunately, due to the short deadline it is not possible to talk before submitting an EOI.

WARNING

Do NOT submit via the ARC form. You are expected to have academic support, which will only be provided to the selected applicant.

The selected candidate will be proposed to ARC by the following deadline:

Application Deadline: 5pm on Monday 13th October 2025

Supervised by: Dr Stuart James, in collaboration with Dr Eamonn Bell and Dr Anne Reinarz.

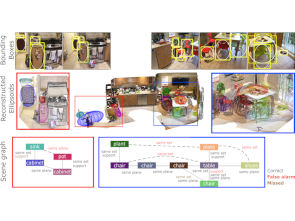

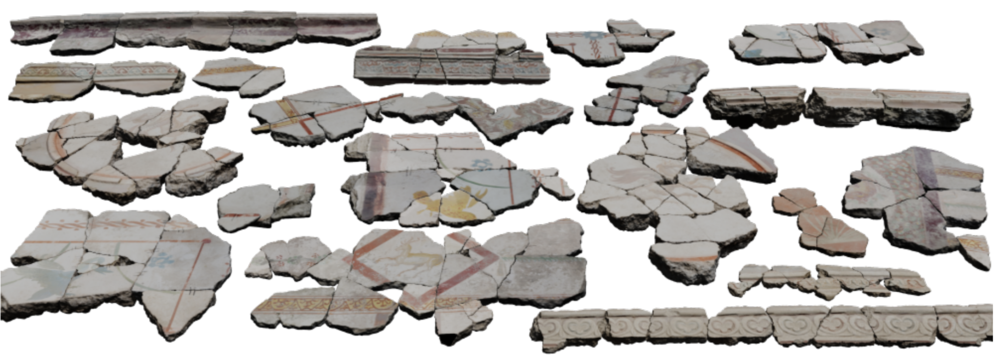

Past Success Story! Samuel Waugh (Durham Computer Science student)

This summer, Durham University Computer Science student Samuel Waugh completed a research internship with the N8 Centre of Excellence in Computationally Intensive Research (N8 CIR) under my supervision, developing ArteFact, an innovative web-based tool that helps art historians uncover meaningful connections between paintings and scholarly writing using a domain-adapted CLIP model. What impressed me most was how quickly Samuel was able to translate a complex research idea into a usable system, showing real creativity, technical skill, and careful consideration for end users.

More details at: https://stuart-james.com/research/2025/10/03/N8-CIR.html