Transferring old book image style to real world photos

26 Aug 2016

Category: research

Style transfer has become a popular area of research, with public applications such as Prisma built on Neural Style Transfer [Gatys'15]. Earlier this week I wanted to answer a question: does it really capture the larger style and context of an image? And how does it compare with [Wang'13], where an artistic stroke style was learnt? The British Library Flickr dataset of over 1 million images [BL'13] provides an interesting test case, since its images share an inherent style to transfer.

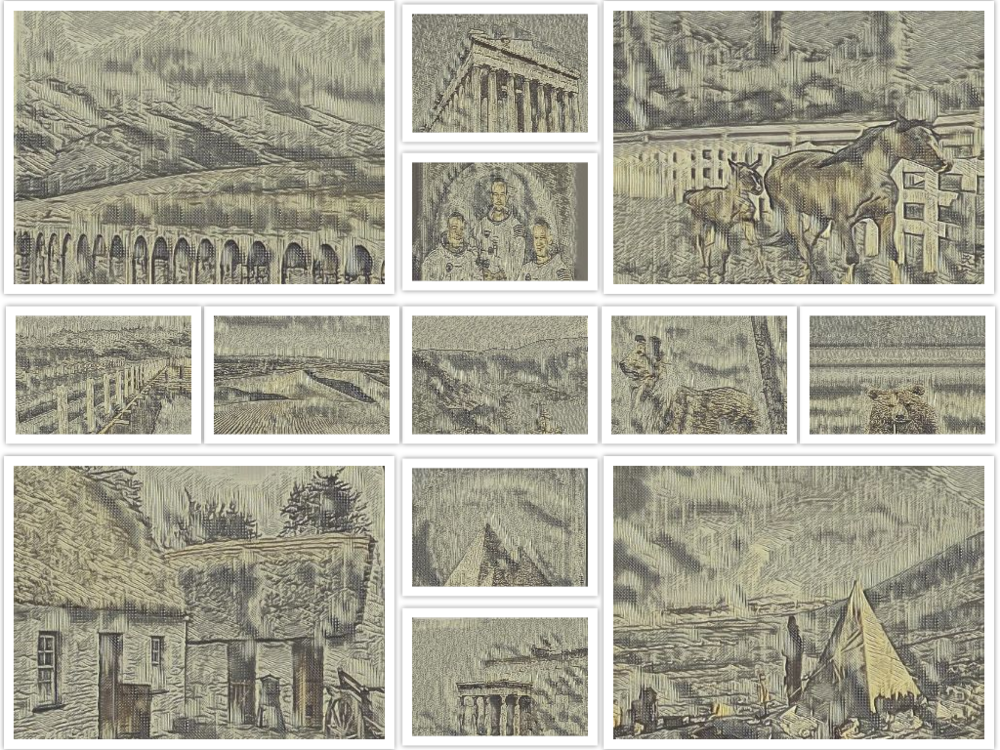

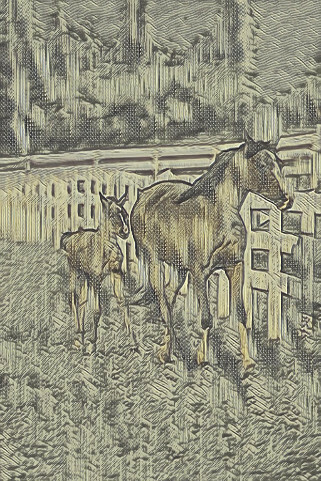

Having read the papers around this previously, I was fairly sure the style would not transfer very well, but on the chance that local statistics could enforce something coherent, it was worth running (and it also gave me a chance to play with such networks). Taking a few examples, these are the best results from transferring the style of BL'13 images to photos from the Berkeley Segmentation Dataset (BSDS500) [BSDS500'11].

These results were achieved using the Texture Nets method [Ulyanov'16], trained on a single example. The results shown are the most visually appealing ones, picked after playing with the texture / style weights as well as the chosen example. Code is available on GitHub.
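To make the texture / style weights a bit more concrete, here is a minimal NumPy sketch of a Gram-matrix style loss in the spirit of [Gatys'15], combined with a content term. It is not the code used for the results above: the function names, the single-layer setup and the default weights are all assumptions for illustration, and a real system would compute the features with a pretrained network rather than take them as plain arrays.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # Summing over all spatial positions turns the map into texture statistics.
    return flat @ flat.T / (h * w)


def style_loss(generated, style):
    """Squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.sum(diff ** 2))


def content_loss(generated, content):
    """Mean squared difference between two feature maps of the same shape."""
    return float(np.mean((generated - content) ** 2))


def total_loss(generated, content, style, content_weight=1.0, style_weight=1.0):
    """Weighted sum of the two terms; the weights here are hypothetical defaults."""
    return (content_weight * content_loss(generated, content)
            + style_weight * style_loss(generated, style))
```

Raising `style_weight` relative to `content_weight` pushes the output towards the texture of the style example at the expense of the photo's structure, which is the trade-off being tuned by hand here.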

What is quite interesting is that from a distance the results look plausible; it isn't until you look at one next to another, or zoom in, that you realize these aren't actually the same style. Logical hatching patterns that describe shadows or depth are ignored, or, where they are present, they don't make sense.
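One plausible contributing factor, assuming a Gram-matrix style loss as in [Gatys'15], is that the style statistics are summed over all spatial positions and so say nothing about where a pattern sits in the image. The sketch below uses random arrays as a stand-in for a layer's activations (an assumption; no real network is involved) and shows that shuffling the positions leaves the Gram matrix unchanged.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


rng = np.random.default_rng(0)
# Random stand-in for the activations of one layer (hypothetical sizes).
features = rng.normal(size=(8, 16, 16))

# Shuffle the spatial positions, using the same permutation for every channel.
perm = rng.permutation(16 * 16)
shuffled = features.reshape(8, -1)[:, perm].reshape(8, 16, 16)

# The layout is different, but the style statistics match.
same_statistics = np.allclose(gram_matrix(features), gram_matrix(shuffled))
same_layout = np.allclose(features, shuffled)
```

In a real network each feature already summarizes a local patch, so small-scale strokes can survive, but nothing in this statistic ties hatching to the shadows or depth it is meant to describe.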

As a mini-conclusion, style transfer, although gaining a lot of hype, still has a long way to go before it accurately reproduces general artistic style. Still, the results are interesting, and if you aren't looking for exact replication, they are visually appealing. It must be borne in mind that the British Library dataset is challenging: its style has been evolving for human understanding over millennia. It is a problem to keep working on, possibly guided by transfer learning.

References

[Gatys'15] Leon A Gatys and Alexander S Ecker and Matthias Bethge. "A Neural Algorithm of Artistic Style". arXiv (http://arxiv.org/abs/1508.06576). 2015.

[Wang'13] T Wang and J Collomosse and D Greig and A Hunter. "Learnable Stroke Models for Example-based Portrait Painting". Proc. British Machine Vision Conference (BMVC). 2013.

[BL'13] British Library Flickr. https://www.flickr.com/photos/britishlibrary/. 2013.

[BSDS500'11] Berkeley Segmentation Dataset. https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/resources.html#bsds500. 2011.

[Ulyanov'16] Dmitry Ulyanov and Vadim Lebedev and Andrea Vedaldi and Victor Lempitsky. "Texture Networks: Feed-forward Synthesis of Textures and Stylized Images". arXiv (http://arxiv.org/abs/1603.03417). 2016.